How can developers write code without actually typing most of it? A workflow called “vibe coding,” a term coined by Andrej Karpathy in February 2025, offers an answer. This approach replaces traditional line-by-line programming with a prompt-execute-refine loop driven by large language models. Developers describe what they want in natural language, then test and refine the AI-generated results rather than writing the code themselves.

Vibe coding transforms development into a conversation with AI, where your intentions matter more than your syntax.

The tooling ecosystem supporting this method includes chat-based LLMs like ChatGPT and Claude, integrated AI code assistants, and platforms that automatically run and test generated code. These tools enable rapid prototyping and MVP development, and they let non-technical creators build functional applications without deep programming knowledge. Specialized tools such as Cursor Composer have further reduced the amount of user interaction needed to generate code. Much as B2B integration transforms supply chains by automating processes and minimizing manual errors, vibe coding automates code generation and reduces development overhead.
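The prompt-execute-refine loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular tool works: `vibe_loop`, `check_add`, and `fake_model` are hypothetical names, and `fake_model` is a stand-in for a real LLM call that simply returns a wrong answer first and a corrected one once the prompt contains feedback.

```python
from typing import Callable, Optional


def vibe_loop(
    prompt: str,
    generate: Callable[[str], str],
    check: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Return the first generated source that passes `check`, or None."""
    current_prompt = prompt
    for _ in range(max_rounds):
        source = generate(current_prompt)   # prompt
        error = check(source)               # execute and test
        if error is None:
            return source
        # refine: feed the failure back into the next prompt
        current_prompt = f"{prompt}\n\nThe previous attempt failed: {error}"
    return None


def check_add(source: str) -> Optional[str]:
    """Run the candidate code and test it; return an error message or None."""
    namespace: dict = {}
    try:
        # exec() on generated code is only acceptable inside a sandbox.
        exec(source, namespace)
        if namespace["add"](2, 3) != 5:
            return "add(2, 3) did not return 5"
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: wrong first, right after feedback."""
    if "failed" in prompt:
        return "def add(a, b):\n    return a + b\n"
    return "def add(a, b):\n    return a - b\n"


result = vibe_loop("Write add(a, b).", fake_model, check_add)
```

The developer's contribution here is the prompt and the check, not the function body, which is the shift in responsibility that the workflow implies.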

Industry analyses suggest significant productivity gains, with some studies reporting that developers complete tasks up to 56% faster with AI assistants. The approach eliminates much of the boilerplate work that traditionally consumes development time, and it shortens validation cycles dramatically. Prototypes that once took weeks can now be tested within days or even hours.

However, vibe coding introduces concerning risks. Code produced this way often lacks documented design intent, making long-term maintenance challenging. The generated artifacts may contain:

- Subtle bugs that evade initial testing

- Insecure patterns embedded in seemingly correct code

- Non-idiomatic constructs that confuse future developers
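As one illustration of an insecure pattern hiding in seemingly correct code, consider a database lookup. The sketch below uses Python's standard `sqlite3` module; the table, the function names, and the payload are made up for the example. Both functions return the same result for ordinary input, so a quick manual test would not tell them apart.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a small in-memory table for the demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Insecure: user input is interpolated directly into the SQL text.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the driver binds the value, so it is never parsed as SQL.
    query = "SELECT name, role FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


conn = make_db()
payload = "nobody' OR '1'='1"

normal_unsafe = find_user_unsafe(conn, "alice")
normal_safe = find_user_safe(conn, "alice")
leaked = find_user_unsafe(conn, payload)   # matches every row
blocked = find_user_safe(conn, payload)    # matches nothing
```

With the crafted payload, the interpolated query returns every row in the table, while the parameterized version returns none.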

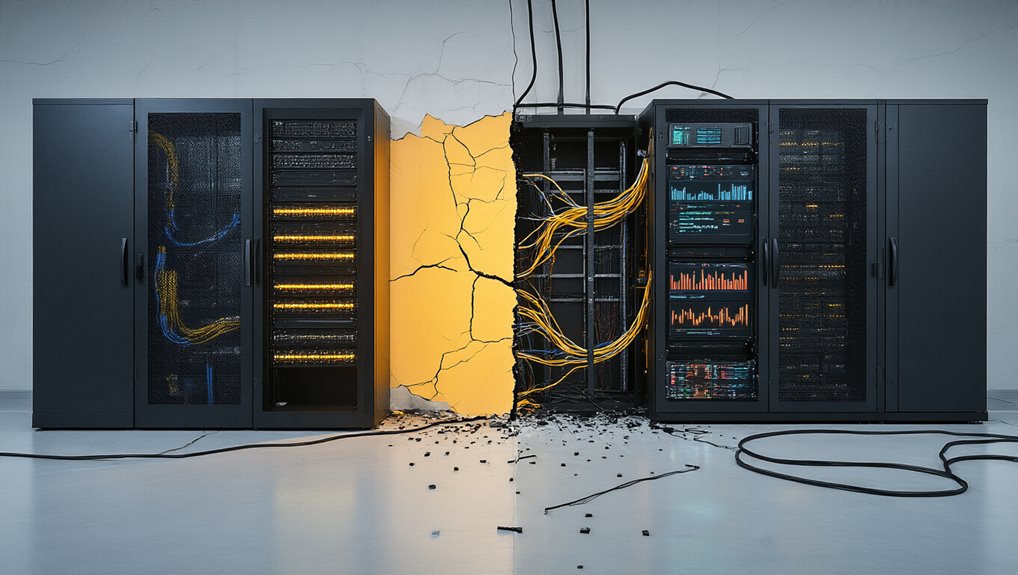

This invisible technical debt accumulates silently, particularly when the pressure to iterate quickly pushes teams to skip thorough code reviews and security scanning. Organizations face heightened risk when production systems rely on AI-authored code and no one on staff fully understands the core logic. Y Combinator’s report that 25% of startups in its Winter 2025 batch had codebases that were 95% AI-generated underscores how prevalent this practice has become.

To mitigate these concerns, teams should put safeguards in place: automated static analysis, mandatory unit testing, version control for both code and prompts, strict access controls for credentials, and human review checkpoints for security-critical modules. These measures balance the productivity benefits of vibe coding against responsible software engineering practices and keep dangerous technical debt from accumulating.
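To give a sense of what an automated static check might look like, here is a minimal sketch built on Python's standard `ast` module. It is an assumption-laden toy rather than a substitute for a real analyzer: `scan_source`, the `RISKY_CALLS` set, the `SECRET_HINTS` tuple, and the sample snippet are all hypothetical, and the rules only cover two obvious cases.

```python
import ast
from typing import List

RISKY_CALLS = {"eval", "exec"}                        # illustrative rule set
SECRET_HINTS = ("password", "secret", "api_key", "token")


def scan_source(source: str) -> List[str]:
    """Flag direct eval/exec calls and string literals assigned to secret-like names."""
    findings: List[str] = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: a direct call to a risky builtin.
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append(f"line {node.lineno}: call to {node.func.id}()")
        # Rule 2: a string constant assigned to a credential-like variable.
        if (
            isinstance(node, ast.Assign)
            and isinstance(node.value, ast.Constant)
            and isinstance(node.value.value, str)
        ):
            for target in node.targets:
                if isinstance(target, ast.Name) and any(
                    hint in target.id.lower() for hint in SECRET_HINTS
                ):
                    findings.append(
                        f"line {node.lineno}: hard-coded value for {target.id}"
                    )
    return findings


# Made-up generated snippet used only to exercise the scanner.
generated = 'API_KEY = "sk-test"\n\ndef run(expr):\n    return eval(expr)\n'
report = scan_source(generated)
```

A check like this could run before merge, with any non-empty report routing the change to a human reviewer instead of being accepted automatically.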